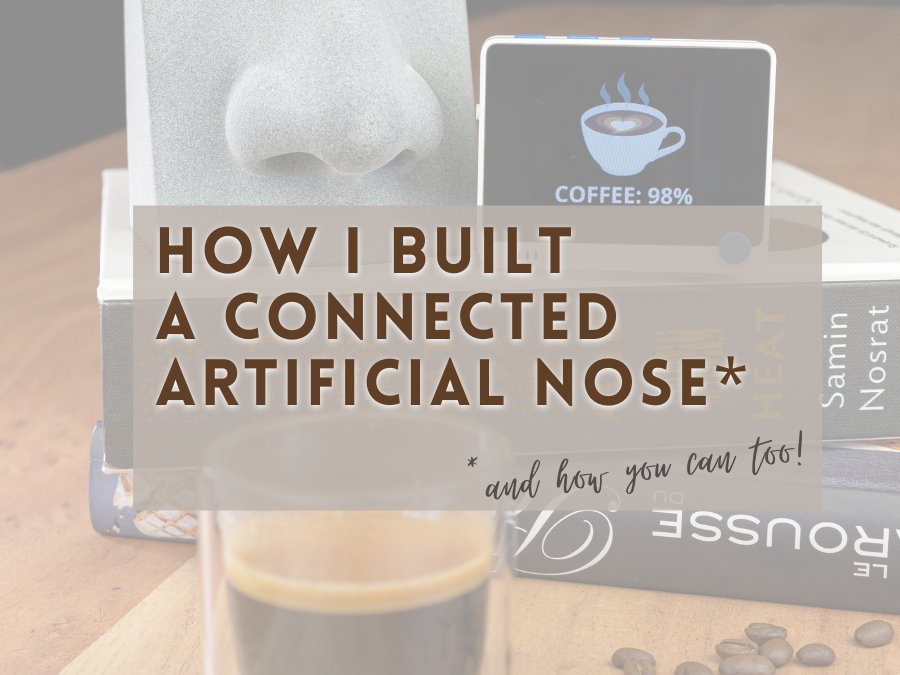

Over the past few months, I have worked on a pretty cool project that some of you might have already heard about as it sort of went viral. I built a DIY, general-purpose, artificial nose that can smell virtually anything you teach it to recognize!

It is powered by the Wio Terminal (an Arduino-compatible prototyping platform), a super affordable electronic gas sensor, and a TinyML neural network that I trained using the free online tool Edge Impulse.

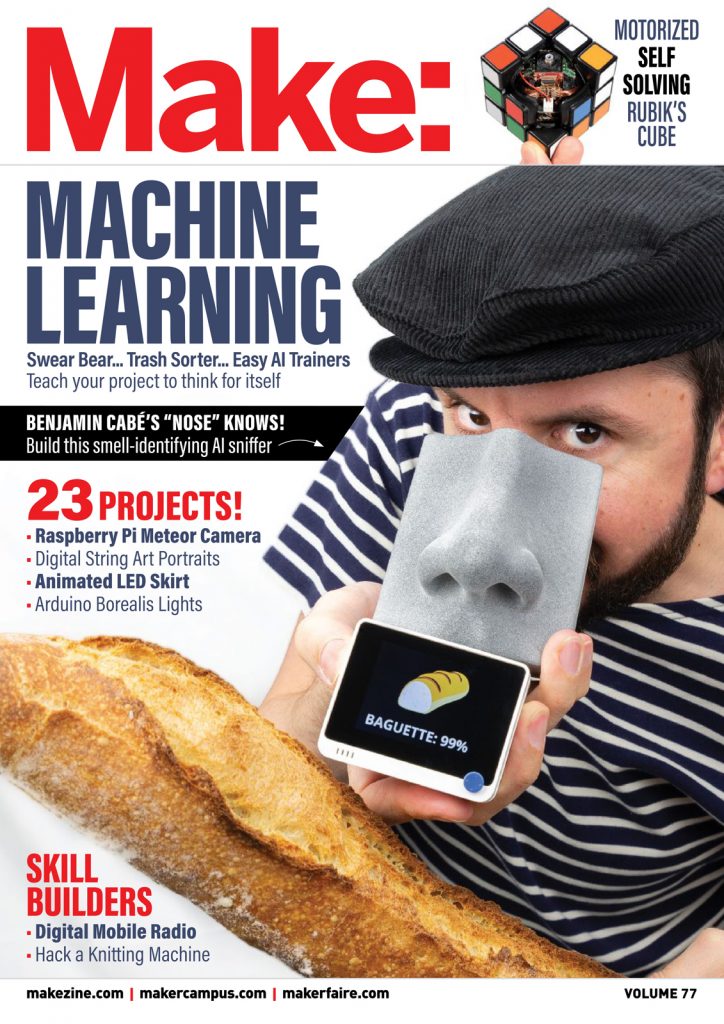

The project was recently featured on the cover of Make: Magazine, and I encourage you to check out the article I wrote for them before reading further.

The Make: Magazine article covers a lot about how you can build the artificial nose for yourself, so I want to use this blog post to dive deeper into why this project is so important to me. In particular, I want to share with you how it helped me understand more about AI than I’d ever thought, and how I eventually ended up connecting the “nose” to an IoT platform (namely, Azure IoT).


Making Neural Networks Tangible

Despite being passionate about all things software, Machine Learning (ML) has always been a field that’s eluded me, perhaps because it tends to be too abstract and too much maths for my visual brain?

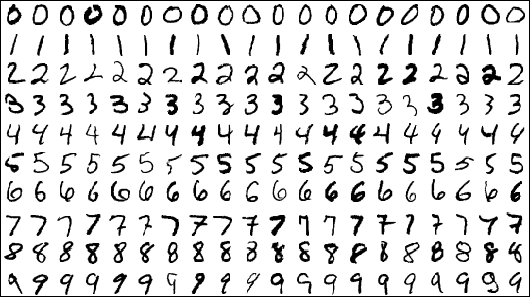

Sample images from the MNIST test dataset.

Speaking of visual things, every time I have tried to open a book promising to be an introduction to ML, most of the introductory examples involved image classification (ex. automatically recognizing handwritten digits from the MNIST database). And, sadly, those innocent pixels would be anything but visual to me, as they would quickly turn into abstract matrices.

So when I started to think of implementing an artificial nose, I didn’t initially approach it as a Machine Learning problem. Instead, I tried to use my intuition: “What characterizes a smell?”. And my intuition was telling me that somehow I needed to establish a correlation between the concentrations of the various gases measured by the gas sensor (carbon monoxide, ethyl alcohol, etc.), and the associated smell. However, doing a simple read of the gas concentrations at a given point in time would probably not cut it: how would it tell the difference between a really strong alcohol smell, and one that was maybe more volatile?

Quickly, I realized that acquiring a couple seconds of sensor data would probably be just enough to “capture” the olfactory fingerprint of each smell. With these few seconds of sensor data, I could look at the variation (min, max, average, etc.) of the concentration of each gas, and this would hopefully characterize each smell.
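To make the idea concrete, here is a minimal Python sketch of summarizing a short window of readings into a few statistics per gas. The gas names, the sample values, and the `extract_features` function are made up for illustration; this is not the processing block the project actually uses.

```python
from statistics import mean

def extract_features(window):
    """Summarize a window of readings into per-gas statistics.

    `window` maps a gas name to the list of readings captured during
    the window (a hypothetical layout, for illustration only).
    """
    features = {}
    for gas, readings in window.items():
        features[f"{gas}_min"] = min(readings)
        features[f"{gas}_max"] = max(readings)
        features[f"{gas}_avg"] = mean(readings)
    return features

# Made-up readings for two gases, a few samples each.
window = {
    "co": [10, 12, 14, 12],
    "ethanol": [200, 260, 240, 220],
}
fingerprint = extract_features(window)
```

The resulting `fingerprint` dictionary is the kind of compact "olfactory fingerprint" that can then be fed to a classifier.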

It turns out that once I had extracted those characteristics—something that I can now refer to as feature extraction, like the AI grown-ups, and which was really easy to do using the Edge Impulse tool suite—all that was left was to effectively establish the correlation between them and the expected smells. However, I didn’t really know what kind of neural network architecture I would need, let alone what a neural network was anyway. So, once again, I leveraged the Edge Impulse environment.

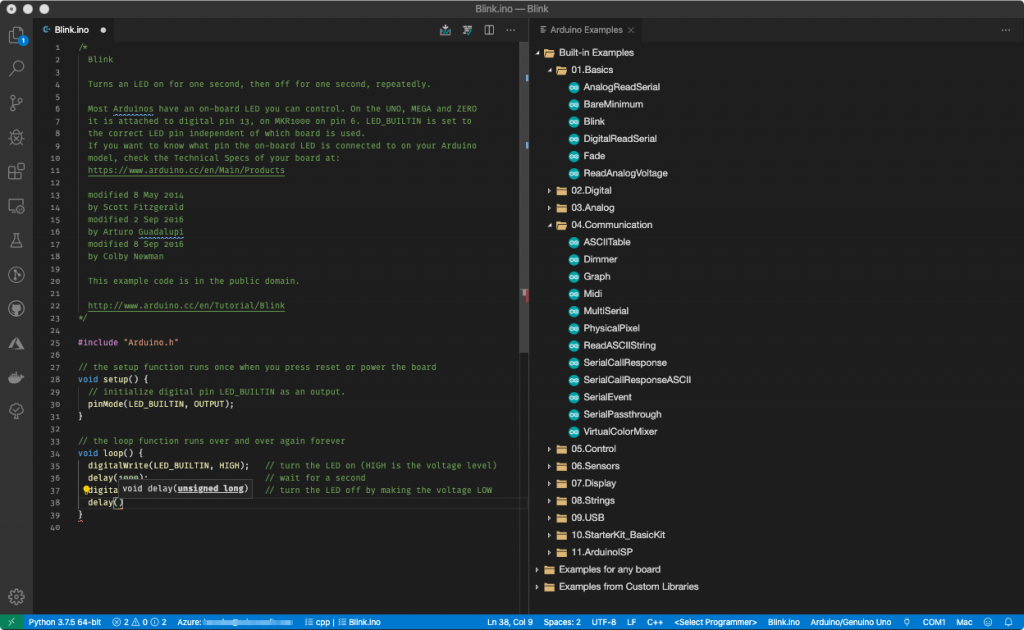

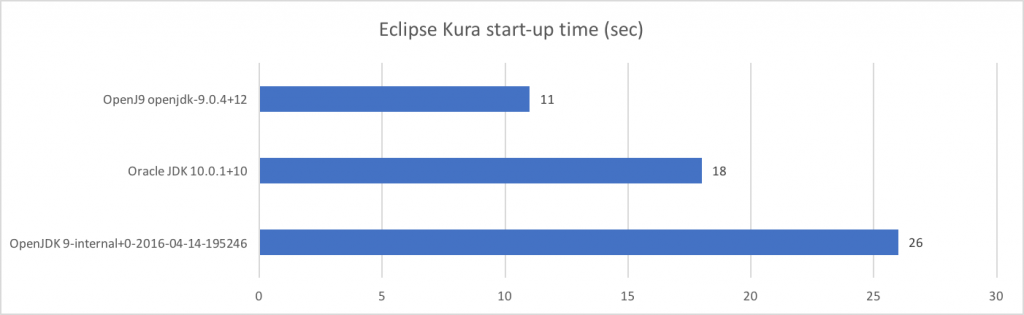

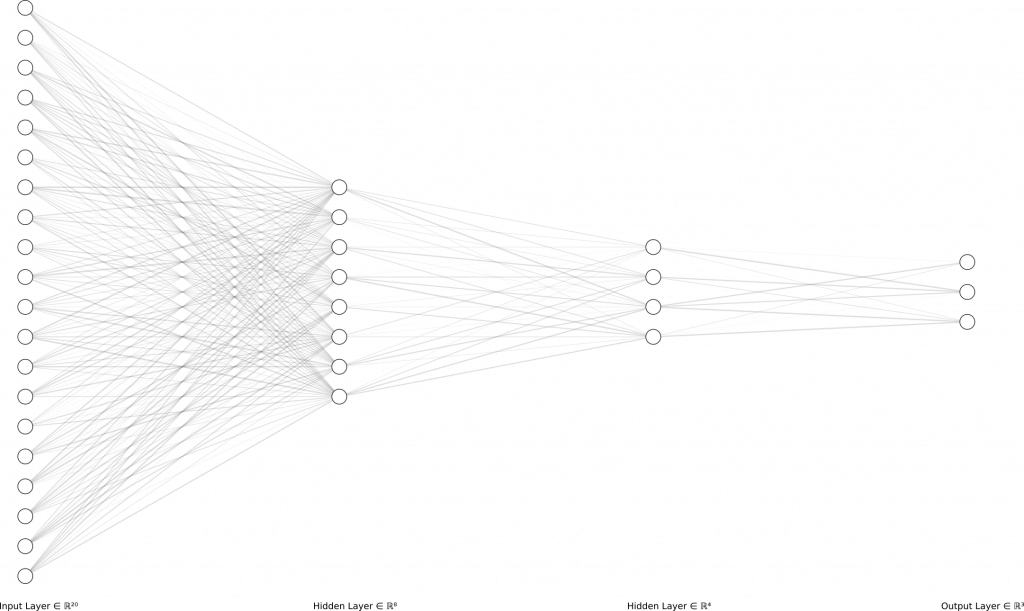

It turns out the kind of classification problem I was looking at was reasonably simple: given the minimum/maximum/average/… concentration of each gas over a given time window (I found 1.5s to be the sweet spot), what is the predicted smell? And one simple way to “solve” that equation is to use a so-called fully-connected neural network, like the one shown in the diagram.

During the training phase, the training data represents the ground truth (ex. “This is 100% coffee!”) and is used to tweak the parameters of the equation (the weights of the neurons) based on how much each characteristic (ex. the average concentration of NO2) contributes to each smell.

Once the model has been trained, and during the inference phase, a given input/olfactory fingerprint entering the network (left-hand side of the diagram) ends up being “routed” to the appropriate output bucket (right-hand side), effectively giving a prediction about what smell it corresponds to.
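For readers who, like me, prefer to see things rather than read equations, the inference phase can be sketched in plain Python. This is only a toy: the weights are hand-picked rather than trained, the two labels are examples, and the real model is generated by Edge Impulse rather than written by hand.

```python
import math

def dense(inputs, weights, biases):
    """One fully-connected layer: every output neuron sees every input."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def relu(values):
    """Keep positive activations, zero out the rest."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def predict(features, layers, labels):
    """Push the features through each layer; return (label, probabilities)."""
    activations = features
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    probabilities = softmax(dense(activations, weights, biases))
    best = max(range(len(labels)), key=lambda i: probabilities[i])
    return labels[best], probabilities

# Hand-picked toy weights (training would normally find these).
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),  # hidden layer, 2 neurons
    ([[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0]),    # output layer, 2 smells
]
labels = ["coffee", "whiskey"]  # example labels only
label, probabilities = predict([0.9, 0.1], layers, labels)
```

Here the input features sit on the left-hand side, and the entry of `probabilities` with the highest value is the "output bucket" the fingerprint was routed to.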

Building an actual nose

When I initially shared my project on social media back in May last year, I quickly realized lots of people were interested in it.

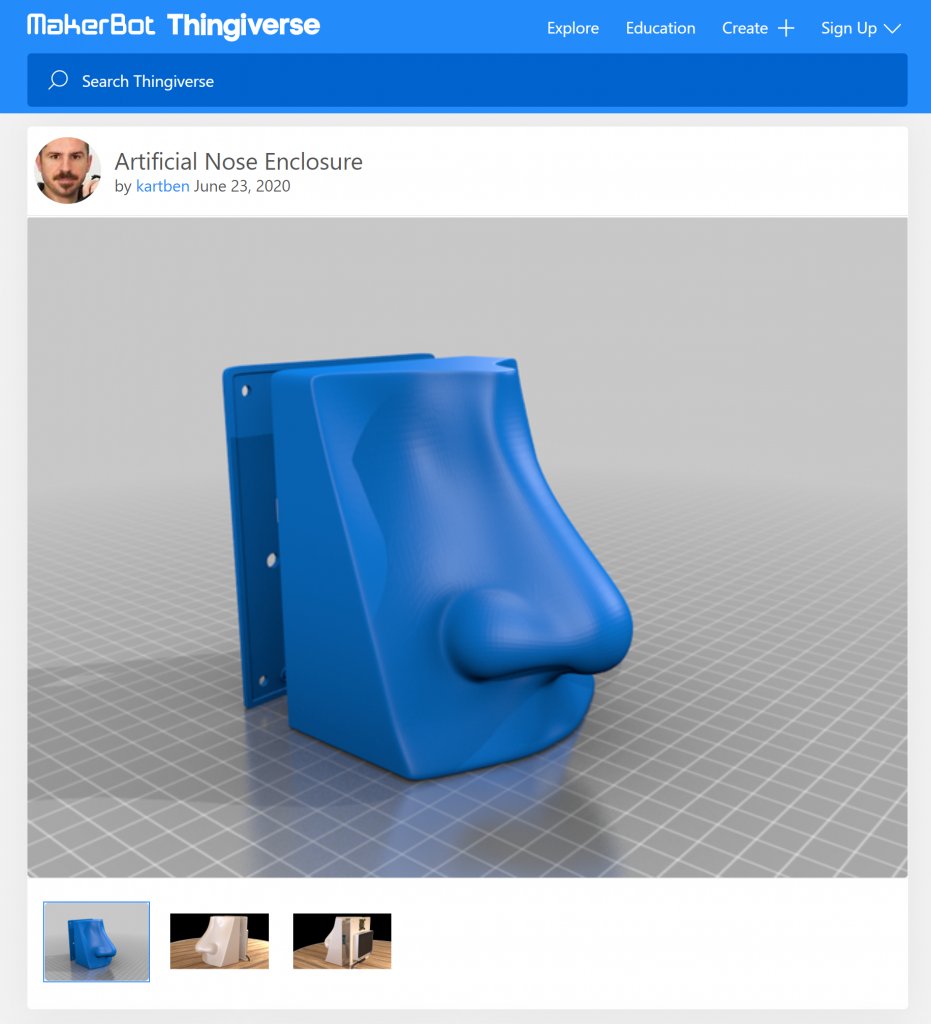

This motivated me to go further and to turn my initial prototype into an actual nose! I had never done that before, so I ended up teaching myself how to use 3D CAD software so that I could design an actual enclosure for my device. I picked Blender (which I would not recommend for pure CAD work, as there are better alternatives out there, ex. TinkerCAD) and 3D-printed the resulting plastic enclosure.

Turning the nose into an IoT device

An interesting aspect of TinyML is that it enables scenarios where your low-power, constrained, microcontroller-based equipment is completely autonomous when it comes to performing machine learning inference (ex. guessing a smell). It is very powerful, as it means your sensor data never has to leave your device and you don’t need to rely on any sort of cloud-based AI service. But on the other hand, it also means that your smart device might not be so smart if it ends up living in its own echo chamber, right?

At the heart of an IoT solution is often the “thing” itself, and it makes a lot of sense to design it to be as smart as possible, since there are many situations where relying on any form of network communication or cloud-based processing is impractical at best, and sometimes plain impossible.

Connecting the Artificial Nose to Azure IoT Central

The Artificial Nose is effectively an IoT Plug and Play device.

As soon as I was happy with how it performed at smelling things, and once I had completed the development of the graphical user interface, I used the Azure IoT SDK (and some of the work I had done last year) to enable the nose to talk to the Azure IoT services.

It means you can very easily connect the device to Azure IoT Central (using the Wio Terminal’s Wi-Fi module), and get access to gas sensor data telemetry in near-realtime, see what the device is smelling, etc.

More importantly, you can automatically trigger rules when, for example, a bad smell is being detected, therefore allowing the nose to be much smarter than if it were just a standalone, offline, device.
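Rules like this are typically set up in the IoT Central application itself rather than written as code, but the underlying logic is simple enough to sketch in Python. The telemetry field names (`smell`, `confidence`), the list of "bad" smells, and the threshold below are all hypothetical and do not reflect the actual payload sent by the firmware.

```python
import json

BAD_SMELLS = {"rotten_food", "smoke"}  # hypothetical labels
MIN_CONFIDENCE = 0.8                   # hypothetical threshold

def should_alert(message):
    """Return True when a telemetry message reports a confident bad smell."""
    telemetry = json.loads(message)
    return (
        telemetry["smell"] in BAD_SMELLS
        and telemetry["confidence"] >= MIN_CONFIDENCE
    )

# Example message with made-up values.
message = json.dumps({"smell": "smoke", "confidence": 0.93, "no2": 0.4})
alert_needed = should_alert(message)
```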

If you built the artificial nose for yourself—and I hope many of you will consider doing so!—here are the simple steps for you to connect it to Azure IoT Central:

- First, make sure that your Wio Terminal is running an up-to-date Wi-Fi firmware by following these instructions;

- Create a new Azure IoT Central application (if you already have one you want to use, that works too!);

- In the Administration section of the IoT Central application, look for the Device Connection menu;

- Open the SAS-IoT-Devices enrollment group and take note of the following credentials that you will need to connect your AI nose(s):

  - ID Scope

  - SAS Primary Key

- Flash the Wio Terminal with the latest Artificial Nose firmware (or deploy your own custom build);

- While the Wio Terminal is powered, keep the three buttons (A, B, C) at the top pressed, and slide the reset button. The device should now be showing a black screen;

- Connect to the Wio Terminal over serial and check that it’s running the configuration prompt by typing help, which should show you the list of supported commands. Type the following commands to configure the Wi-Fi connection and the Azure IoT credentials:

  - set_wifissid <your_wifi_ssid>

  - set_wifipwd <your_wifi_password>

  - set_az_iotc <id_scope> <sas_primary_key> <device_id> (id_scope and sas_primary_key as noted earlier, and device_id being the ID you want to give your device in Azure IoT Central)

- Reset the Wio Terminal, and voila! You should now see a new device popping up in the Devices section of your IoT Central application.
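If you are curious why a single group key is enough for any number of noses: with a group enrollment, the Azure Device Provisioning Service expects each device to authenticate with its own key, derived from the group key and the device ID using HMAC-SHA256. The Python sketch below illustrates that derivation. It assumes the firmware performs an equivalent computation on the device, and the key and device ID shown are placeholders.

```python
import base64
import hashlib
import hmac

def derive_device_key(group_key_b64, device_id):
    """Derive a per-device key from an enrollment group key.

    The group key is Base64-decoded, used to sign the device ID with
    HMAC-SHA256, and the signature is Base64-encoded again.
    """
    group_key = base64.b64decode(group_key_b64)
    digest = hmac.new(group_key, device_id.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")

# Placeholder values; never hard-code a real key.
fake_group_key = base64.b64encode(b"not-a-real-key").decode("ascii")
device_key = derive_device_key(fake_group_key, "my-artificial-nose")
```

Because the derivation is deterministic, the same group key and device ID always yield the same device key, while two different device IDs yield different keys.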

Digital Twins meet virtual senses

Like I mentioned above, having the nose talking to an IoT platform enables scenarios where e.g. you trigger an alert when a bad smell is being picked up. But what is a bad smell anyway? This might depend on a lot of different factors, just like the final destination for the actual alert might be highly dynamic.

Let me try to illustrate this with an example of a real estate cleaning company in charge of buildings all around the city of Chicago. Their information system already allows them to keep track of their personnel and associated cleaning schedules, but in a pretty static way: cleaning people are going to their assigned location once a day, no matter what. From time to time, it turns out that the location doesn’t really require urgent cleaning (hello, COVID-19 and slow office spaces!), in which case the cleaning staff would have been better off going to a place that actually required servicing.

Beyond the apparent buzzword, the concept of Digital Twins consists of nothing more than augmenting the information system (staff directory, building inventory, cleaning schedules, etc.) and overall knowledge graph of the cleaning company with entities that correspond to physical, connected assets.

With that in mind, a mere “it doesn’t smell so good in here” signal sent by a sniffing device sitting in an office building can immediately be contextualized, and appropriate actions can be taken. Based on where the device is effectively located, it becomes easy to figure out who is the person responsible for cleaning that space on that particular day, and to notify them accordingly.
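As a rough illustration of that contextualization step, here is a Python sketch that resolves a device to its location, then to the person scheduled to clean it on a given day. The device ID, location, name, and data layout are entirely hypothetical; a real solution would query the company's knowledge graph rather than two in-memory dictionaries.

```python
from datetime import date

# Hypothetical slice of the company's knowledge graph.
DEVICE_LOCATIONS = {"nose-042": "Building A / Floor 3"}
CLEANING_SCHEDULE = {
    ("Building A / Floor 3", date(2021, 9, 6)): "Alex",
}

def who_to_notify(device_id, day):
    """Resolve a smell alert to the person cleaning that location on `day`."""
    location = DEVICE_LOCATIONS.get(device_id)
    if location is None:
        return None  # unknown device, nothing to contextualize
    cleaner = CLEANING_SCHEDULE.get((location, day))
    if cleaner is None:
        return None  # nobody scheduled there on that day
    return {"location": location, "notify": cleaner}

alert = who_to_notify("nose-042", date(2021, 9, 6))
```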

Get started today!

Many people have already started to build the device for themselves and to experiment with what adding “virtual smell” to their devices and applications could mean. If this blog post inspired you to join them, I will leave you with the only two links that you really need to get started:

- You can get your artificial nose hardware kit directly from Seeed Studio.

- You can find the source code, 3D files for the nose enclosure, initial AI model, etc. on my GitHub repository.

artificial-nose by kartben

Instructions, source code, and misc. resources needed for building a Tiny ML-powered artificial nose.

If you enjoyed this article, don’t forget to subscribe to this blog to be notified of upcoming publications! And of course, you can also always find me on Twitter and Mastodon.