For the past few weeks, I’ve been spending some time digging into what some people call AIoT, the Artificial Intelligence of Things. As is often the case in the vast field of the Internet of Things, a lot of the technology powering it is not new. For example, the term Machine Learning actually dates back to 1959(!), and surely we didn’t wait for IoT to become a thing to connect devices to the Internet, right?

In the next few blog posts, I want to share part of my journey into AIoT, and in particular I will try to help you understand how you can:

- Quickly and efficiently train an AI model that uses sensor data ;

- Run an AI model with very limited processing power (think MCU) ;

- Remotely operate your TinyML* solution, i.e. evolve from AI to AIoT.

* TinyML: “the ability to run a neural network model at an energy cost of below 1 mW.” (Pete Warden (@petewarden), TinyML: Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Microcontrollers)

Simplifying data capture and model training

According to Wikipedia, supervised learning is the machine learning task of learning a function that maps an input to an output based on example input-output pairs.

As an example, you may want to use input data in the form of vibration information (that you can measure using, for example, an accelerometer) to predict when a bearing is starting to wear out.

You will build a model (think: a mathematical function on steroids!) that will be able to look at, say, 1 second of vibration information (the input) and tell you what the vibration corresponds to (the output, for example: “bearing OK” / “bearing worn out”). For your model to be accurate, you will “teach” it how to best correlate the inputs to the outputs by providing it with a training dataset. For this example, this would be a few minutes’ or hours’ worth of vibration data, together with the associated label (i.e., the expected outcome).
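To make the idea of “example input-output pairs” a bit more concrete, here is a minimal sketch of how a labeled recording could be cut into 1-second windows. The function name, the 100 Hz sample rate, and the synthetic recordings are all made up for illustration; a real dataset would of course come from an actual sensor.

```python
from typing import List, Tuple


def make_windows(samples: List[float], label: str, sample_rate_hz: int,
                 window_s: float = 1.0) -> List[Tuple[List[float], str]]:
    """Cut one labeled recording into fixed-length (input, output) pairs."""
    size = int(sample_rate_hz * window_s)
    pairs = []
    # Step through the recording one full window at a time.
    # An incomplete window at the end of the recording is dropped.
    for start in range(0, len(samples) - size + 1, size):
        pairs.append((samples[start:start + size], label))
    return pairs


# Hypothetical recordings: 3.5 seconds of vibration at 100 Hz per condition.
ok_recording = [0.01 * (i % 7) for i in range(350)]
worn_recording = [0.05 * (i % 11) for i in range(350)]

dataset = (make_windows(ok_recording, "bearing OK", 100)
           + make_windows(worn_recording, "bearing worn out", 100))
print(len(dataset), "training examples")
```

Each element of `dataset` is one training example: a list of raw readings (the input) paired with the label you expect the model to predict (the output).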

Adding some AI into your IoT project will often follow a similar pattern:

- Capture and label sensor data coming from your actual “thing” ;

- Design a neural network classifier, including the steps potentially needed to process the signal (ex. filter, extract frequency characteristics, etc.) ;

- Train and test a model ;

- Export a model to use it in your application.
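As an illustration of the signal processing step mentioned above, here is a rough sketch of what “extracting frequency characteristics” can look like for a single window of samples. It is not what Edge Impulse runs under the hood; it uses a naive DFT from the standard library only, and the function name and synthetic test signal are my own assumptions.

```python
import cmath
import math
from typing import Dict, List


def extract_features(window: List[float], sample_rate_hz: float) -> Dict[str, float]:
    """Compute two simple features: RMS amplitude and dominant frequency."""
    n = len(window)
    mean = sum(window) / n
    # Remove the constant offset (e.g. gravity on an accelerometer axis).
    centered = [x - mean for x in window]
    rms = math.sqrt(sum(x * x for x in centered) / n)

    # Naive DFT over bins 1..n/2. This is O(n^2), which is fine for a
    # small illustrative window but not for production use.
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        acc = sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)

    return {"rms": rms, "dominant_hz": best_bin * sample_rate_hz / n}


# Synthetic 1-second window: a 10 Hz sine of amplitude 2 riding on an
# offset of 5, sampled at 100 Hz.
window = [5.0 + 2.0 * math.sin(2 * math.pi * 10 * t / 100) for t in range(100)]
features = extract_features(window, 100)
print(features)
```

Features like these, rather than the raw samples, are typically what gets fed to the classifier.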

All those steps might not be anything out of the ordinary for people with a background in data science, but for a vast majority—including yours truly!—this is just too big of a task. Luckily, there are quite a few great tools out there that can help you get from zero to having a pretty good model, even if you have close to zero skills in neural networks!

Enter Edge Impulse. Edge Impulse provides a pretty complete set of tools and libraries that offer a user-friendly (read: no need to be a data scientist) way to work through the steps listed above.

They have great tutorials based on an STM32 developer kit, but since I didn’t have one at hand when initially looking at their solution, I created a quick tool for capturing accelerometer and gyroscope data from my MXCHIP AZ3166 Developer Kit.

To build an accurate model, you will want to acquire lots of data points. IoT devices are often pretty constrained, and yours likely won’t let you simply store megabytes’ worth of data locally, so you often need to get a bit creative and offload some of the data collection.

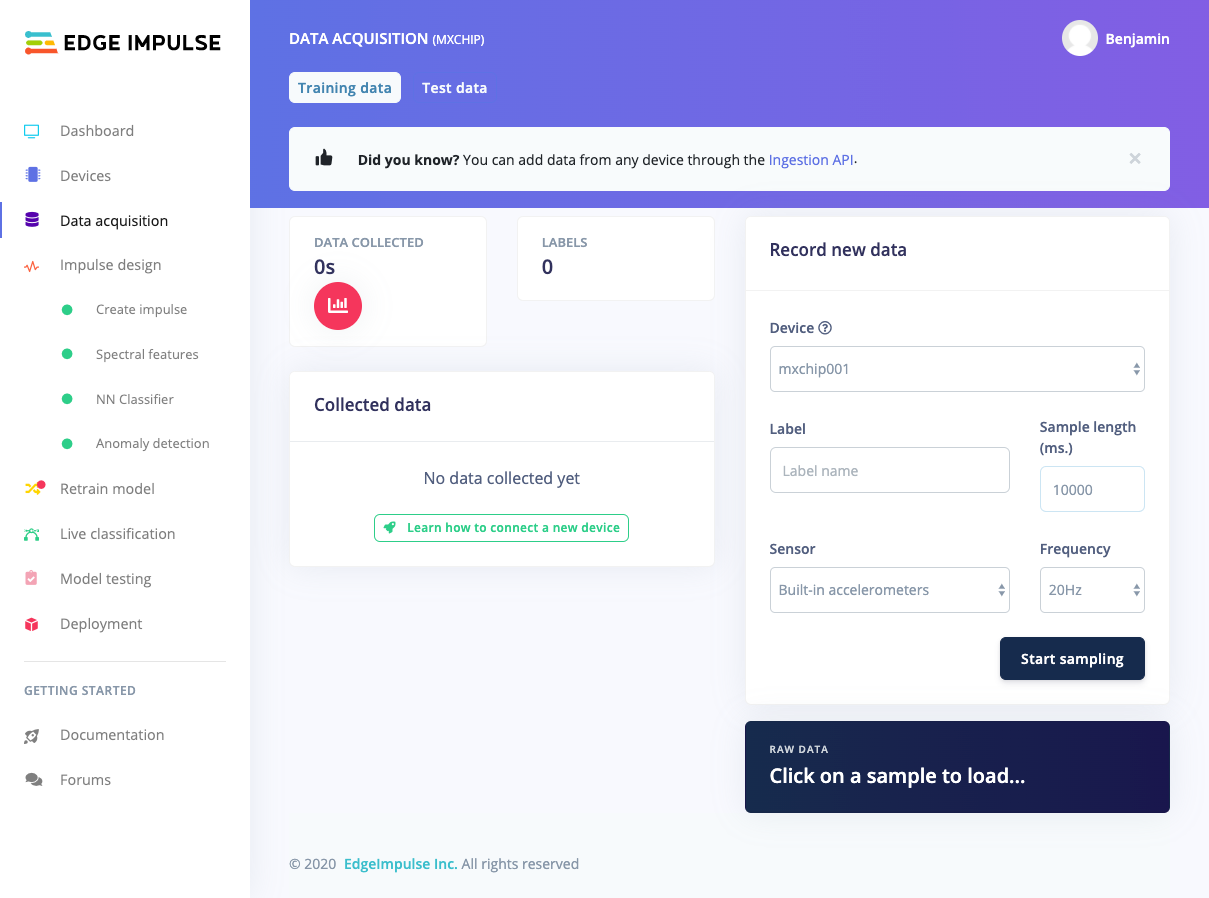

Edge Impulse exposes a set of APIs to minimize the number of manual steps needed to acquire the data you need to train your model:

- The ingestion service is used to send new device data to Edge Impulse ;

- The remote management service provides a way to remotely trigger the acquisition of data from a device.
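To give an idea of what sending device data to the ingestion service involves, here is a sketch that builds and signs a sample document. The field names (`protected`, `signature`, `payload`, `interval_ms`, and so on) and the signing scheme (HMAC-SHA256 computed over the JSON with a placeholder signature of 64 zeros) reflect my understanding of the Edge Impulse data acquisition format, so please double-check them against the official documentation. The sensor names, units, and key below are purely illustrative, and the sketch deliberately stops short of making any HTTP call.

```python
import hashlib
import hmac
import json
import time
from typing import Dict, List


def build_signed_sample(values: List[List[float]], hmac_key: str,
                        device_name: str, device_type: str,
                        interval_ms: float) -> Dict:
    """Build a data acquisition document and sign it with the HMAC key."""
    doc = {
        "protected": {"ver": "v1", "alg": "HS256", "iat": int(time.time())},
        # Placeholder signature, replaced once the HMAC has been computed.
        "signature": "0" * 64,
        "payload": {
            "device_name": device_name,
            "device_type": device_type,
            "interval_ms": interval_ms,
            # Assumed sensor names and units, for illustration only.
            "sensors": [
                {"name": "accX", "units": "mg"},
                {"name": "accY", "units": "mg"},
                {"name": "accZ", "units": "mg"},
            ],
            "values": values,
        },
    }
    encoded = json.dumps(doc)
    doc["signature"] = hmac.new(hmac_key.encode("utf-8"),
                                encoded.encode("utf-8"),
                                hashlib.sha256).hexdigest()
    return doc


sample = build_signed_sample(
    values=[[67, -24, 1031], [68, -24, 1030]],
    hmac_key="not-a-real-key",   # placeholder, use your project's HMAC key
    device_name="mxchip001",
    device_type="MXChip",
    interval_ms=1000 / 150,      # roughly 150 Hz
)
print(sample["signature"])
```

The resulting JSON is what you would then upload to the ingestion service, authenticated with your project’s API key.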

As indicated in the Edge Impulse documentation, “devices can either connect directly to the remote management service over a WebSocket, or can connect through a proxy”. The WebSocket-based remote management protocol is not particularly complex, but porting it to your IoT device might be overkill. In many cases, you can simply use your computer as a proxy that receives sensor data from your IoT device on one side and communicates with the Edge Impulse backend on the other.

So how does it work in practice should you want to capture and label sensor data coming from your MXChip developer kit?

Custom MXChip firmware

You can head over to this GitHub repo and download a ready-to-use firmware that you can copy straight to your MXChip devkit. Once this firmware is installed, the MXChip’s only purpose in life will be to dump the raw values acquired from its accelerometer and gyroscope sensors on its serial interface, as fast as possible (~150 Hz). If you were to look at the serial output from your MXChip, you’d see tons of traces similar to this:

…

[67,-24,1031,1820,-2800,-70]

[68,-24,1030,1820,-2730,-70]

[68,-24,1030,1820,-2730,-70]

[68,-23,1030,1820,-2730,-70]

[68,-24,1030,1820,-2800,-70]

[68,-24,1030,1820,-2800,-70]

[68,-24,1031,1820,-2800,-70]

[69,-22,1030,1820,-2730,-70]

[69,-22,1030,1820,-2800,-70]

…

There are probably plenty of more elegant or efficient ways to expose the sensor data over serial (ex. Firmata, binary encoding such as CBOR, etc.), but I settled on something quick 🙂.
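One upside of this quick format is that each trace happens to be a valid JSON array, so it is trivial to parse on the computer side. Here is a minimal sketch of such a parser. Note that I am assuming the six values are the accelerometer X/Y/Z readings followed by the gyroscope X/Y/Z readings; check the firmware source to confirm the actual order. The `ImuSample` type and `parse_line` function are names made up for this example.

```python
import json
from typing import NamedTuple, Optional


class ImuSample(NamedTuple):
    # Assumed ordering: accelerometer axes first, then gyroscope axes.
    acc_x: float
    acc_y: float
    acc_z: float
    gyro_x: float
    gyro_y: float
    gyro_z: float


def parse_line(line: str) -> Optional[ImuSample]:
    """Parse one serial trace, returning None for partial or garbled lines."""
    line = line.strip()
    if not (line.startswith("[") and line.endswith("]")):
        return None
    try:
        values = json.loads(line)
    except json.JSONDecodeError:
        return None
    if len(values) != 6 or not all(isinstance(v, (int, float)) for v in values):
        return None
    return ImuSample(*values)


print(parse_line("[67,-24,1031,1820,-2800,-70]"))
print(parse_line("[68,-24,10"))  # truncated line, as can happen on startup
```

Returning `None` for anything unexpected matters in practice, because the first line read from a serial port is often cut off mid-way.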

Serial bridge to Edge Impulse

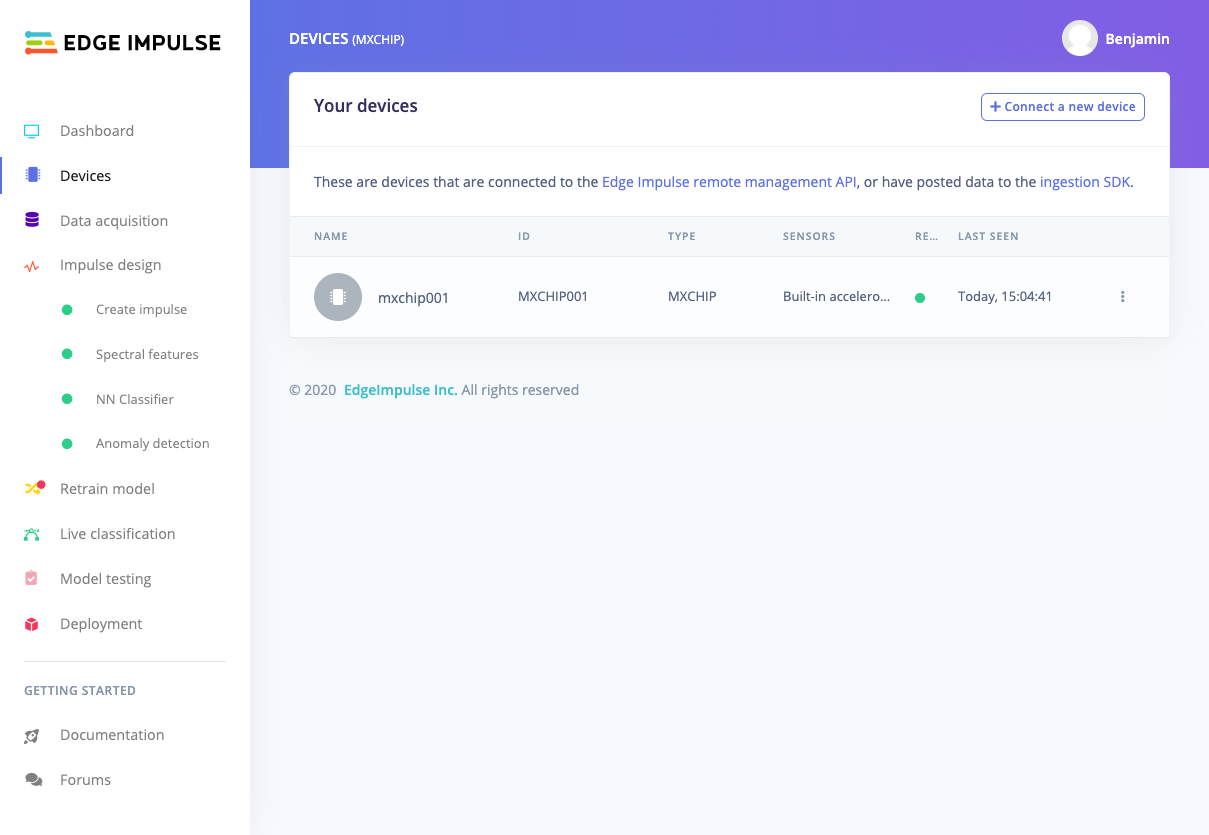

To quickly feed sensor data into Edge Impulse, I’ve developed a very simple Node.js app that reads input from the serial port on the one hand and talks to the Edge Impulse remote management API on the other. As soon as you install and start the bridge (and assuming, of course, that you have an MXChip connected to your machine), you’ll be able to remotely trigger the acquisition of sensor data right from the Edge Impulse portal. You will need to create an Edge Impulse account and project.

npm install serial-edgeimpulse-remotemanager -g

The tool should be configured using the following environment variables:

- EI_APIKEY: Edge Impulse API key (ex. ‘ei_e48a5402eb9ebeca5f2806447218a8765196f31ca0df798a6aa393b7165fad5fe’) for your project ;

- EI_HMACKEY: Edge Impulse HMAC key (ex. ‘f9ef9527860b28630245d3ef2020bd2f’) for your project ;

- EI_DEVICETYPE: Edge Impulse Device Type (ex. ‘MXChip’) ;

- EI_DEVICEID: Edge Impulse Device ID (ex. ‘mxchip001’) ;

- SERIAL_PORT: Serial port (ex. ‘COM3’, ‘/dev/tty.usbmodem142303’, …).
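If you end up writing your own scripts around the same settings, it can help to fail early when one of them is missing. The sketch below is not how the bridge itself validates its configuration; it is just one way to check for the five variable names listed above, and `load_config` is a name I made up for the example.

```python
import os
from typing import Dict, Mapping

REQUIRED_VARS = ("EI_APIKEY", "EI_HMACKEY", "EI_DEVICETYPE",
                 "EI_DEVICEID", "SERIAL_PORT")


def load_config(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Return the required settings, or raise if any is missing or empty."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise ValueError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}


# Placeholder values for illustration; use your own project's keys.
example_env = {
    "EI_APIKEY": "ei_placeholder",
    "EI_HMACKEY": "placeholder",
    "EI_DEVICETYPE": "MXChip",
    "EI_DEVICEID": "mxchip001",
    "SERIAL_PORT": "COM3",
}
config = load_config(example_env)
print(config["EI_DEVICEID"], "on", config["SERIAL_PORT"])
```

Calling `load_config()` with no argument reads from the real process environment instead of the example dictionary.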

Once all the environment variables have been set (you may declare them in a .env file), you can run the tool:

serial-edgeimpulse-remotemanager

From that point, your MXChip device will be accessible in your Edge Impulse project.

You can now very easily start capturing and labeling data, build & train a model based on this data, and even test the accuracy of your model once you’ve actually trained it.

In fact, let’s check the end-to-end experience with the video tutorial below.

TensorFlow on an MCU?!

Now that we’ve trained a model that turns sensor data into meaningful insights, we’ll see in a future article how to run that very model directly on the MXChip. You didn’t think we were training that model just for fun, did you?

Don’t forget to subscribe to be notified when this follow-up article (as well as future ones!) comes out.

3 replies on “Quickly train your AI model with MXChip IoT DevKit & Edge Impulse”

Great write up! Could this work with a esp32 + accelerometer and an android phone?

I don’t see why not! FWIW since I wrote this post Edge Impulse actually added direct support for mobile using WebAssembly. See https://docs.edgeimpulse.com/docs/through-webassembly

Hi Benjamin. Thank you for your article. Is it possible to capture only the accelerometer or gyroscope separately? I am not familiar with bin files and I assume that the .ino file cannot be changed in this file. Could you help me with how to do that? Can I just change the .ino file?